The History and Evolution of WebAssembly in Kubernetes

Matt Butcher

WebAssembly and Kubernetes Done Right

Kubernetes (K8s) is the established container orchestration solution. From data centers to pools of devices, it has proven nearly ubiquitous. Every major cloud provider offers hosted K8s, yet it can also be run conveniently on a small cluster of Raspberry Pis. K8s has lived up to its initial promise: Bring container orchestration to everyone.

WebAssembly (Wasm) has taken a different path to success. Originally conceived as a way to extend Web browser language support beyond JavaScript, it has far outgrown its initial promise. BBC and Amazon use Wasm in their embedded streaming players. Prime Video uses Wasm to increase stability and speed when updating its app for more than 8,000 device types. Shopify makes commerce extensible with Wasm. Envoy Proxy uses Wasm as a plug-in framework. And Disney Streaming uses Wasm in its Disney+ Application Development Kit (D+ ADK).

To us, the most exciting application of Wasm is on cloud and edge. To do that well, Wasm needs to run inside of K8s.

Why Wasm on the Server Side?

There are three characteristics that Wasm has, because of its browser heritage, that are highly desirable for a server-side platform:

- A strong security sandbox

- Multi-arch, multi-OS, and multi-language support

- High performance and efficiency during execution

The browser requires a security sandbox to prevent a security breach from a Wasm binary downloaded off the Internet. On the cloud (and edge) side, the same requirement applies. Just as is the case with virtual machines (VMs) and containers, Wasm binaries must be able to execute in a multi-tenant environment without posing a risk either to the host or to other guests running on the same service.

The browser also requires that a single Wasm binary be able to run on any operating system and CPU architecture that a browser supports. In the Wasm world, dozens of languages (including biggies like JavaScript, Python, Go, C/C++, and Rust) can compile to Wasm, and then run on any platform. On the server side, this is a fantastic boon, because developers themselves do not need to target the specifics of a production environment. They simply deliver the application, and platform engineers can deploy it onto whatever operating system and architecture combo they see fit (we like to demo this by deploying an application into a mixed K8s cluster with Arm and Intel worker nodes).

Finally, in the browser space, performance is paramount. Website visitors are fickle and impatient. Consequently, Wasm needs to download quickly, cold-start in the blink of an eye, and remain performant during the entirety of a user’s visit. Likewise, it must consume few resources. None of us wants a webpage taking up gigs of memory just to paint a form on the screen!

And this performance point is what sets Wasm apart on the cloud. For workloads like serverless functions, event-driven architectures, and steps in a processing chain, we want our code to cold start instantly, consume as few resources as possible, and execute at near-native speeds. Wasm is the only technology that fits this bill while still providing a robust sandbox and tremendous language, architecture, and OS support.

There are, of course, many ways to run Wasm binaries. Spin, the developer tool for building server-side Wasm, can run in a wide array of environments, from embedded systems to large Nomad clusters. The most exciting environment, though, is K8s.

The Road to Running Wasm in K8s

Most of us at Fermyon have a deep background in the container and K8s space. We’ve been part of the CNCF Technical Oversight Committee (TOC), contributed to and maintained many projects, and even written some children’s books on the matter. Even years ago, we understood how important it was to run Wasm inside of K8s.

We knew that for Wasm to be successful inside of K8s, it would be an anti-pattern to merely package up a Wasm runtime inside of a container and deploy that. Doing this negates every single reason to run Wasm: start-up time is slow, you’re locked into a particular OS and architecture, and there is no clear way to attach external services to the Wasm file without first proxying them through the container layer. So from the beginning, we set our sights higher: we wanted to run Wasm inside of K8s. Not on top of it.

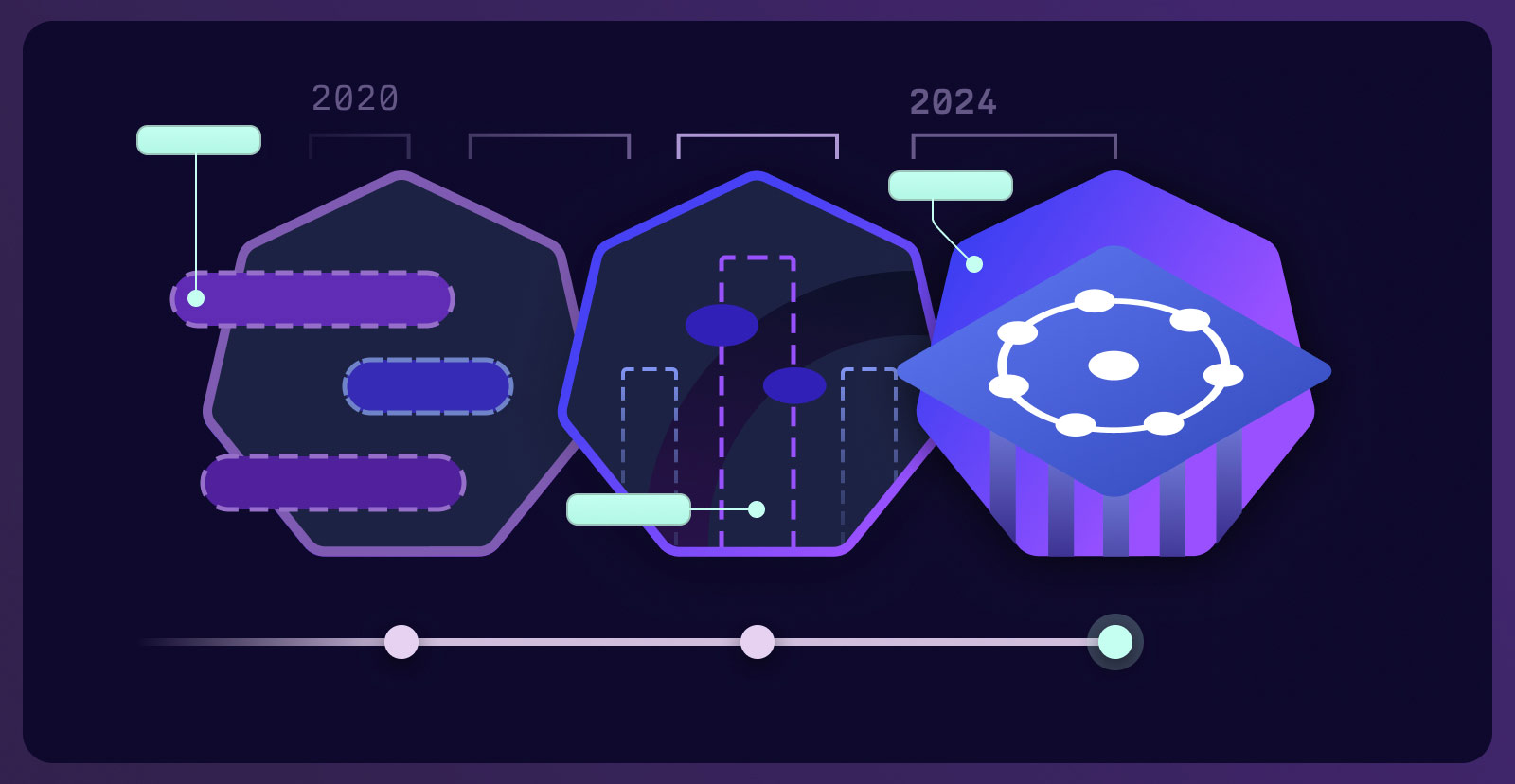

Our first two attempts were not so successful, though.

Back in 2020, those who would go on to become the Fermyon founding team built an (unreleased) experimental Wasm runtime for K8s called WOK (Wasm On K8s). The idea, at the time, was to replace containerd with a Wasm runtime. We made it a few months into this project before realizing that the scope was huge.

That experience, combined with some work we did with Virtual Kubelet, inspired us to try a second approach: Virtualize a Kubelet worker which runs Wasm. Krustlet (a mangled portmanteau of Rust Kubelet) worked reasonably well for running Wasm binaries, but had a major limitation: It did not integrate well into any of the rest of the K8s ecosystem. Everything from volume mounts to service meshes was simply not available to a Krustlet application. Over time, we came to realize that once again we had bitten off more work than we could manage.

The old saying about the third time being the charm certainly held up here. While we were building Spin, Microsoft started a project called runwasi, which took the sensible approach of supporting Wasm inside of containerd by writing a containerd shim. This meant that one could simply write a regular Pod specification, with all the usual bells and whistles, and have some or all of the images reference Wasm binaries instead of containers.
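To make the shim approach a little more concrete, here is a minimal sketch of what "a regular Pod specification" pointing at a Wasm image might look like. It builds the manifests as plain Python dictionaries and prints them as JSON, which `kubectl apply -f` accepts. The RuntimeClass name `wasmtime-spin`, the handler name `spin`, and the image reference are assumptions made for illustration; the names in a real cluster depend on how the shim was installed.

```python
import json

# Illustrative values only: none of these names come from the article.
RUNTIME_CLASS_NAME = "wasmtime-spin"                   # hypothetical RuntimeClass name
SHIM_HANDLER = "spin"                                  # assumed containerd handler name
WASM_IMAGE = "registry.example.com/hello-spin:0.1.0"   # hypothetical OCI reference


def runtime_class(name: str, handler: str) -> dict:
    """Build a RuntimeClass that maps a name to a containerd shim handler."""
    return {
        "apiVersion": "node.k8s.io/v1",
        "kind": "RuntimeClass",
        "metadata": {"name": name},
        "handler": handler,
    }


def wasm_pod(name: str, image: str, runtime_class_name: str) -> dict:
    """Build an ordinary Pod whose only unusual field is runtimeClassName."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # This field asks the node to run the Pod with the Wasm shim
            # instead of the default container runtime.
            "runtimeClassName": runtime_class_name,
            "containers": [{"name": name, "image": image}],
        },
    }


def manifest_list(*items: dict) -> dict:
    """Wrap several objects in a v1 List so they can be applied together."""
    return {"apiVersion": "v1", "kind": "List", "items": list(items)}


if __name__ == "__main__":
    manifests = manifest_list(
        runtime_class(RUNTIME_CLASS_NAME, SHIM_HANDLER),
        wasm_pod("hello-spin", WASM_IMAGE, RUNTIME_CLASS_NAME),
    )
    print(json.dumps(manifests, indent=2))
```

Apart from `runtimeClassName`, the Pod is unremarkable, which is the point: volumes, labels, and the rest of the usual Pod fields can be added exactly as they would be for a container.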

With this tool and several others (including the awesome KWasm project), Microsoft, Fermyon, SUSE, Liquid Reply, and others were able to put together an excellent K8s toolkit: SpinKube.

Why We’re Excited About SpinKube

SpinKube provides all of the tools you need to run Spin Wasm applications inside of your K8s cluster. It works on every major K8s distribution, and from data center to Raspberry Pi (and probably smaller), it’s got you covered.

What makes SpinKube so special? Serverless functions have been around for a decade. While developers have made it clear over and over again that Lambda-style functions are both faster to write and easier to maintain, the runtime solutions for serverless have always been cobbled together from ill-suited technologies. K8s has seen its share of serverless platforms (Knative, OpenWhisk, Fn Project, and OpenFaaS), but in all cases, they were based on container technologies that made them slow and resource-hungry. In one recent conversation I had with an OpenWhisk user, they noted that it takes 37 seconds to cold start a single function. Contrast that with Spin, which comes in at under half of a millisecond.

Research suggests that the average K8s node runs only 30 containers. Since each container is long-running, resource-hungry, and scoped to peak resource consumption, the size (and cost) of a K8s node starts to rise with density.

SpinKube does not suffer from this problem. In fact, it is trivial to deploy 250 apps per node in a SpinKube cluster. Whether you have that many apps or not, the main point is that serverless-style Wasm is far more efficient to operate than containers in your K8s cluster.
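As a rough back-of-the-envelope illustration of what those two density figures imply, the sketch below estimates how many nodes a fleet would need at 30 workloads per node versus 250. The fleet size of 1,000 workloads is a hypothetical number chosen for the example, and the calculation assumes per-node density is the only constraint, which ignores node sizing, headroom, and redundancy.

```python
import math

CONTAINERS_PER_NODE = 30    # average container density cited above
SPIN_APPS_PER_NODE = 250    # SpinKube density cited above


def nodes_needed(workloads: int, per_node: int) -> int:
    """Return the minimum node count when density is the only limit."""
    if workloads < 0 or per_node <= 0:
        raise ValueError("workloads must be >= 0 and per_node must be > 0")
    return math.ceil(workloads / per_node)


if __name__ == "__main__":
    fleet = 1000  # hypothetical number of workloads
    print("container nodes:", nodes_needed(fleet, CONTAINERS_PER_NODE))  # 34
    print("SpinKube nodes: ", nodes_needed(fleet, SPIN_APPS_PER_NODE))   # 4
```

Under these simplified assumptions, the same 1,000 workloads fit on 4 nodes rather than 34.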

Add to that Spin’s “blinking cursor to deployed application in two minutes or less” developer story, and it becomes clear why SpinKube can turn into a major boon for both developer and operations teams.

One of the best parts about SpinKube, though, is that Spin applications are deployed directly into K8s nodes alongside containers. This means you can:

- Run containers as sidecars to Wasm (or vice versa)

- Mount volumes, secrets, and config maps to a Spin app

- Use your service mesh, sidecars, telemetry, and other existing K8s services

- Continue to manage your policy control and security the same way you always have

- Use your K8s monitoring and dashboard systems unaltered

But if you’re really interested in efficiency and performance, there’s one more chapter to this story.

Fermyon Platform for Kubernetes Brings Hyper-Elasticity to You

In 2022, we built Fermyon Cloud, our hosted developer-centered cloud service. The goal was to make it trivially easy for a developer to deploy (for free!) a Spin application into a highly stable production environment.

At the time of this writing, a single node in our own Fermyon Cloud cluster hosts 3,000 user-supplied applications.

We can scale from zero instances of an app up to tens of thousands of instances in under a second. And we’re accomplishing all of this on AWS 2xl-sized VMs! Fermyon Cloud is downright cheap to operate and enables us to have a very generous free tier.

We wanted to bring that density to you.

So we took the engine inside of Fermyon Cloud — the massively multi-tenant Wasm sandboxing environment called Cyclotron — and we combined it with SpinKube to create our enterprise offering: Fermyon Platform for Kubernetes.

Fermyon Platform for Kubernetes is an excellent choice for enterprises that are already invested in K8s, and want to bring Wasm into the fold alongside existing container workloads. Along with the hyperscaling Cyclotron runtime, we provide several additional operational features as well as support, training, and a trustworthy packaging and delivery system.

Fermyon Platform for Kubernetes is great for:

- Hosting workloads that need to scale up rapidly — even if unpredictably (as in the case of trending articles or sudden sales surges)

- Hosting a large number of serverless functions

- Providing a multi-tenant system with strong performance or density needs

- Managing sophisticated pipelines such as ETL workloads

- Replacing Lambda, Azure Functions, Google Cloud Functions, or other similar “not in K8s” serverless architectures

- Amping up the performance and scalability of workloads that once ran on OpenWhisk, OpenFaaS, Knative, or Fn Project

Conclusion

K8s was originally thought of as a container orchestrator. And Wasm was originally a browser technology. Good technologies meet their design intent. Great technologies exceed it. And both of these have proven to be great technologies.

SpinKube and Fermyon Platform for Kubernetes each provide an excellent way to draw on the power of Wasm to build ultra-high-performing serverless functions that can run side-by-side with your existing containerized applications in K8s.

If you’d like to get started, book a demo so that our team can walk you through scaling and delivering your applications with dramatic gains in performance while reducing your cloud costs.