Lightweight Kubernetes and Wasm is a Perfect Combo

Matt Butcher

kubernetes

devops

webassembly

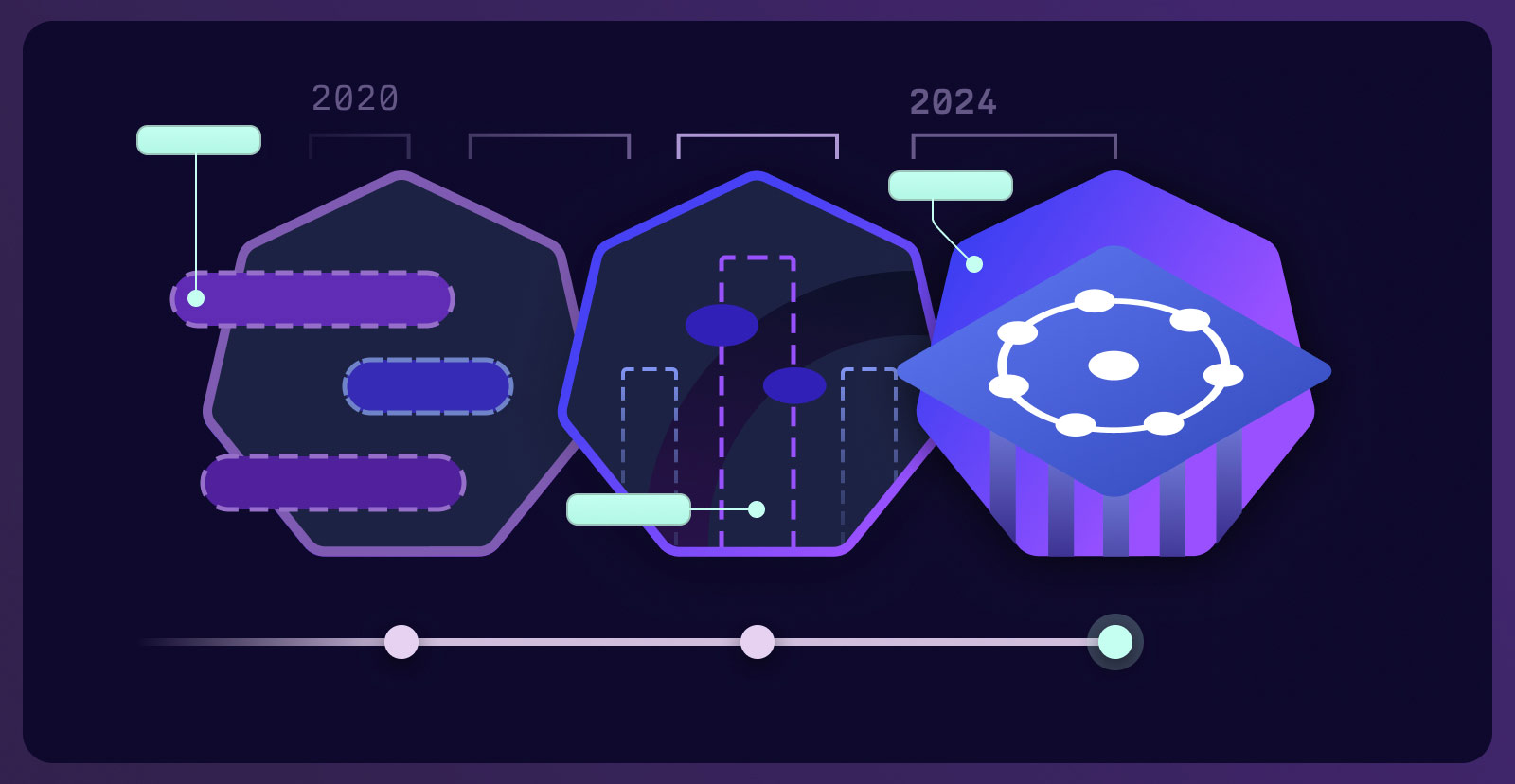

In the last few years, we’ve witnessed the introduction of several new lightweight Kubernetes distributions. SUSE’s Rancher Labs k3s project was one of the earliest. Canonical now includes Microk8s in Ubuntu. And k0s is a single-binary Kubernetes distribution.

Earlier this year, we launched a project called SpinKube that makes it easy to run Spin applications inside your Kubernetes cluster. One of the cool things about WebAssembly apps is that they are much smaller than containers, and Spin apps in particular require very little runtime overhead because they use the serverless function design pattern.

So if you’re really trying to squeeze the most out of your lower-powered hardware or virtual machines, running a lightweight Kubernetes distribution in conjunction with SpinKube can be a powerful combo. At KubeCon Paris, we showed how we could run 5,000 Spin applications on a k3s instance.

Use Cases

Running 5k apps is cool… but most of us don’t actually have that many apps to run. So why would I want to use this combo of a tiny Kubernetes and a high-performing SpinKube? We’ll dive into three use cases, but first let’s highlight the characteristics hidden behind the performance numbers:

- Because Spin apps can start in half a millisecond, responses are very fast. That means frontline web services can benefit from Spin’s speed.

- Because the Wasm sandbox is very light (compared to VMs and containers), tens of thousands of sandbox instances can be started on even modest hardware.

- Because Spin apps run for only milliseconds, seconds, or minutes, their resources are freed very quickly.

So why does running at such high density matter? Here are a few scenarios.

1. Stack infrequently used servers on a cheap VM

One big class of “Kubernetes vampire” is the infrequently used application. We all have them. These are the applications that provide some level of supporting service, like an occasionally used dashboard or a rarely accessed API server. They may handle a few thousand, a few hundred, or even a dozen or so requests per day. But they’re running all the time, and that means they’re consuming CPU, memory, ports, and other precious compute resources.

This is part of the reason that recent research suggests that 83% of container costs are for running mostly idle services. A friend of mine who works for a media company told me recently that their clusters show 100% provisioned (i.e. all of the cluster resources are “spoken for”) even while the CPU sits 80% idle. All the resources are claimed, even though they’re not doing anything most of the time.

The solution to this is to make such services into Spin apps. Then they’re only running (and consuming resources) when handling requests. You can pack lots of these utility services onto a cheap and small node in your Kubernetes cluster and free up a boatload of system resources for your other containerized services.
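The idle-cost argument is easy to make concrete with back-of-envelope arithmetic. The sketch below compares how many MiB-seconds of memory a long-running server reserves per day with what a per-request instance holds while it is actually handling traffic. The workload numbers (1,000 requests a day, 50 ms each, 64 MiB per instance) are hypothetical, and giving both models the same per-instance memory is a simplifying assumption; since Wasm apps are much smaller than containers, a real comparison would likely widen the gap. It also ignores cold-start and runtime overhead.

```python
from dataclasses import dataclass

SECONDS_PER_DAY = 24 * 60 * 60


@dataclass
class Workload:
    requests_per_day: int
    seconds_per_request: float  # how long one request keeps an instance alive
    memory_mib: float           # memory held while an instance is alive (assumed equal for both models)


def always_on_mib_seconds(w: Workload) -> float:
    """A long-running server holds its memory for the whole day, busy or not."""
    return w.memory_mib * SECONDS_PER_DAY


def per_request_mib_seconds(w: Workload) -> float:
    """A per-request instance holds memory only while it handles a request."""
    return w.memory_mib * w.requests_per_day * w.seconds_per_request


def busy_fraction(w: Workload) -> float:
    """Share of the always-on reservation that per-request execution actually needs."""
    return per_request_mib_seconds(w) / always_on_mib_seconds(w)


if __name__ == "__main__":
    # Hypothetical rarely used dashboard.
    dashboard = Workload(requests_per_day=1_000, seconds_per_request=0.05, memory_mib=64)
    print(f"always-on:     {always_on_mib_seconds(dashboard):,.0f} MiB*s/day")
    print(f"per-request:   {per_request_mib_seconds(dashboard):,.0f} MiB*s/day")
    print(f"busy fraction: {busy_fraction(dashboard):.4%}")
```

For this made-up dashboard, the per-request model needs memory for 1/1728 of the time an always-on server reserves it, well under 0.1%. Plugging in your own request counts and durations gives a first estimate of how much a rarely used service is really costing you.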

2. Make high-traffic sites much cheaper

Spin also excels at the opposite end of the spectrum. Spin apps scale per request. That means that when no requests are coming in, zero instances of the app are running. When 10k requests arrive concurrently, 10k instances of the Spin app are started.
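Per-request scaling can be modeled as “one live instance per in-flight request.” Here is a minimal sketch of that model: a sweep over start and end events that reports the peak number of simultaneous instances for a request trace. It is a toy model for intuition, not how SpinKube or the Spin runtime actually schedules work, and the `(arrival_s, duration_s)` trace format is made up for this example.

```python
def live_instances(requests, t):
    """Instances alive at time t under a one-instance-per-request model.

    requests: iterable of (arrival_s, duration_s) tuples. An instance exists from
    arrival until arrival + duration; nothing runs while no request is in flight.
    """
    return sum(1 for start, dur in requests if start <= t < start + dur)


def peak_instances(requests):
    """Maximum simultaneous instances, found with a sweep over start/end events."""
    events = []
    for start, dur in requests:
        events.append((start, +1))        # request arrives: an instance starts
        events.append((start + dur, -1))  # request done: the instance exits and frees its resources
    # Tuples sort by time first; at equal times -1 sorts before +1, so an instance
    # that finishes exactly when another starts is not double-counted.
    events.sort()
    live = peak = 0
    for _, delta in events:
        live += delta
        peak = max(peak, live)
    return peak


# Hypothetical trace: quiet, then 10,000 requests arriving together at t = 1 s, 10 ms each.
burst = [(1.0, 0.01)] * 10_000
print(live_instances(burst, 0.5), peak_instances(burst), live_instances(burst, 2.0))
```

Running it prints `0 10000 0`: no instances before the burst, 10,000 during it, and none again once the requests complete, which is the scale-to-zero behavior described above.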

This is where that 0.5-millisecond cold start shines. Spin can scale up about three orders of magnitude faster than you can blink an eye.
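The “three orders of magnitude” comparison is just a ratio, so it is easy to check. The sketch below assumes a human blink lasts roughly 100 to 400 ms; that range is an assumption for illustration, not a figure from this post. Only the 0.5 ms cold start comes from the text.

```python
import math

# Spin cold-start time quoted in this post.
COLD_START_MS = 0.5


def orders_of_magnitude(slower_ms: float, faster_ms: float = COLD_START_MS) -> float:
    """Return log10 of the ratio between a slower and a faster duration."""
    return math.log10(slower_ms / faster_ms)


# Assumed range for the duration of a human blink.
for blink_ms in (100, 300, 400):
    print(f"{blink_ms} ms blink: {blink_ms / COLD_START_MS:.0f}x slower, "
          f"{orders_of_magnitude(blink_ms):.2f} orders of magnitude")
```

Across that assumed range the ratio is 200x to 800x, i.e. between roughly 2.3 and 2.9 orders of magnitude, so “about three” is the fair reading.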

Even high-traffic websites show patterns, with certain times of day seeing much more traffic than others. The basic pattern tends to be wave-shaped, with peaks in the target market’s midday, and troughs in the middle of the night. For some sites, weekends show big lulls, while others peak during the weekend. And as we at Fermyon know from experience, when a piece of content trends on social media, the resulting traffic spikes may be 100x or even 1000x above regular traffic levels.

The traditional way of dealing with these patterns is simply to provision for the peaks, and accept the fact that during troughs you are operating (and paying for) idle services. Autoscalers may be used to handle load in some cases, but they are often slow, taking on the order of dozens of minutes to complete a scale-up event.
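To put a number on “provision for the peaks,” here is a back-of-envelope sketch over a hypothetical wave-shaped day: traffic bottoms out at 100 req/s at midnight and peaks at 1,000 req/s at noon, following a cosine curve. The curve and the numbers are assumptions for illustration, and the sketch treats required capacity as directly proportional to request rate.

```python
import math


def wave_demand(hours=24, trough=100.0, peak=1000.0):
    """Hypothetical hourly traffic (req/s): trough at hour 0 (midnight), peak at hour 12 (noon)."""
    mean = (peak + trough) / 2
    amp = (peak - trough) / 2
    return [mean - amp * math.cos(2 * math.pi * h / hours) for h in range(hours)]


def idle_fraction_when_provisioned_for_peak(demand):
    """Share of capacity-hours left idle when capacity is fixed at the peak all day."""
    provisioned = max(demand) * len(demand)  # peak capacity held for every hour
    used = sum(demand)                       # capacity actually needed hour by hour
    return 1 - used / provisioned


demand = wave_demand()
print(f"peak: {max(demand):.0f} req/s, trough: {min(demand):.0f} req/s")
print(f"idle share of peak-sized capacity: {idle_fraction_when_provisioned_for_peak(demand):.0%}")
```

With these made-up numbers, a cluster sized for the noon peak leaves 45% of its capacity-hours idle over the day, before any social-media spike is taken into account. The cosine is only a convenient smooth wave; feeding in real hourly request metrics instead of `wave_demand()` gives the same ratio for an actual service.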

Because of the fast cold start, though, Spin apps can scale instantly. And even when we’ve trended on Twitter and Hacker News, we’ve never had to add even a single extra node to handle the load. Spin is just that efficient.

If you’ve got a small number of frontline services, some of which are high traffic, running SpinKube on a small-footprint Kubernetes distribution often results in lighter cluster requirements while still handling large amounts of inbound traffic.

3. Push apps out to the edge, and run cheaper

Performance isn’t all about CPU and memory. Oftentimes optimizing performance involves cutting network latency to the bare minimum. And that means moving compute workloads from a centralized data center or cloud provider to the edge. Yet edge computing power is often limited. Running full Kubernetes and containers will either be impossible (because the compute power is not there) or very expensive (because scarcity means higher prices).

Running the combination of lightweight Kubernetes and SpinKube can solve this problem as well.

I recently experimented with a frontline web service on Akamai’s Gecko (Generalized Edge Compute) environment. I installed k3s (and also Microk8s, for comparison) along with SpinKube on the smallest-footprint edge node Akamai provides. I was astonished by how fast traffic moved between my home office and the edge node: the full round trip took 30 milliseconds per request. In that time, the request traveled from my home to the datacenter, where a Spin app started, ran to completion, and returned a result, which was then transported back to my house. When testing against a Spin app running in Amazon’s us-west DC, it took 60 milliseconds just to connect to the remote host. Even the fastest application will be perceived as slow when the network does not perform.
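The difference between connection time and full round-trip time is easy to check for yourself. Here is a minimal sketch using only Python’s standard library (`http.client` and `time.perf_counter`) that times a single GET request and reports the connect phase separately. It is a rough single-sample probe, not a benchmark; for real comparisons you would repeat it many times and look at percentiles.

```python
import http.client
import time
from urllib.parse import urlsplit


def time_request(url: str, timeout: float = 10.0) -> tuple[float, float]:
    """Return (connect_ms, total_ms) for one GET request to url.

    connect_ms covers the DNS lookup and TCP handshake (plus the TLS handshake for
    https URLs); total_ms also includes sending the request and reading the body.
    """
    parts = urlsplit(url)
    conn_cls = http.client.HTTPSConnection if parts.scheme == "https" else http.client.HTTPConnection
    conn = conn_cls(parts.hostname, parts.port, timeout=timeout)
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    try:
        start = time.perf_counter()
        conn.connect()                  # resolve, connect, and (for https) handshake
        connected = time.perf_counter()
        conn.request("GET", path)
        conn.getresponse().read()       # wait for the full response body
        done = time.perf_counter()
    finally:
        conn.close()
    return (connected - start) * 1000, (done - start) * 1000
```

For example, `time_request("http://<your-edge-node>/")` returns a `(connect_ms, total_ms)` pair, where the host is a placeholder for wherever your Spin app is exposed. Running the same probe against an edge node and a distant cloud region makes the latency gap visible.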

Early edge apps were limited to simple tasks like munging headers or intelligently routing requests upstream. But when you can run Spin at the edge, you can push far more sophisticated app logic to the edge without taking a performance hit or incurring big costs.

Getting Started

One more nice thing about lightweight Kubernetes distributions is that they are usually very easy to test locally. So if you’re ready to try out SpinKube on tiny Kubernetes, you can give it a test run on that quiet Friday afternoon.

The official SpinKube docs cover using k3d (a k3s-in-Docker tool) as well as installing into Microk8s. In most other cases, the instructions for installing with Helm will get you where you need to go.

From there, you can get started building Spin apps. If you hit any snags, just ping us on Discord. We’re always here and happy to help.